Scaling connections with Ruby and MongoDB

By Michael de Hoog

Coinbase was launched 8 years ago as a Ruby on Rails app using MongoDB as its primary data store. Today, the primary paved-road language at Coinbase is Golang, but we continue to run and maintain the original Rails monolith, deployed at large scale with data stored across many MongoDB clusters.

This blog post outlines some scaling issues we hit when connecting from a Rails app to MongoDB, and how a recent change to our database connection management solved some of them.

Global VM Lock

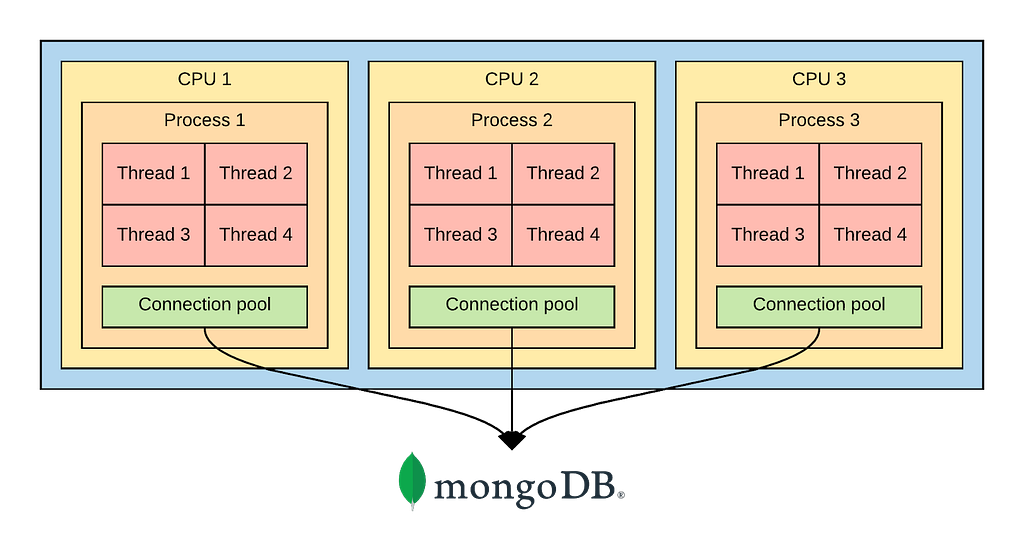

At Coinbase we run our Ruby applications using CRuby (aka Ruby MRI). CRuby uses a Global VM Lock (GVL) to synchronize threads so that only a single thread can execute Ruby code at any one time. This means a single Ruby process can only ever run Ruby code on one CPU core at a time, whether it has a single thread or 100 threads.
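A minimal sketch of how this can be observed, assuming CRuby. The helpers `burn`, `elapsed`, and `compare_timings` are hypothetical names for illustration and are not from our codebase. They run the same CPU-bound work sequentially and then with one thread per unit of work. On CRuby the two timings tend to come out similar, because only one thread runs Ruby code at a time; exact numbers vary by machine and Ruby version.

```ruby
# CPU-bound busy work in pure Ruby, with no I/O.
def burn(iterations)
  sum = 0
  iterations.times { |i| sum += i * i }
  sum
end

# Wall-clock seconds taken by the given block.
def elapsed
  start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  yield
  Process.clock_gettime(Process::CLOCK_MONOTONIC) - start
end

# Run `jobs` units of work one after another, then with one thread per unit.
def compare_timings(jobs:, iterations:)
  sequential = elapsed { jobs.times { burn(iterations) } }
  threaded = elapsed do
    Array.new(jobs) { Thread.new { burn(iterations) } }.each(&:join)
  end
  { sequential: sequential, threaded: threaded }
end

timings = compare_timings(jobs: 4, iterations: 500_000)
puts format("sequential: %.3fs  threaded: %.3fs", timings[:sequential], timings[:threaded])
```

Threads still help with I/O-bound work, since CRuby releases the lock while a thread waits on the network, which is why each process runs many threads that share a connection pool.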

Coinbase.com runs on machines with a large number of CPU cores. To fully utilize these cores, we spin up many CRuby processes, using a load-balancing parent process that allocates work across these child processes. In the application layer, it’s hard to share database connections between these processes, so instead each process has its own MongoDB connection pool, shared by that process’s threads. This means each machine has 10–20K outgoing connections to our MongoDB clusters.
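To make the multiplication concrete, here is a rough sketch of the upper bound on connections from one machine. It assumes the driver keeps a separate pool for each server it talks to. The function name and all three input values are hypothetical, chosen only so the result lands in the range quoted above; they are not our real settings.

```ruby
# Upper bound on outgoing MongoDB connections from one machine when every
# process keeps its own pool, and each pool is maintained per server.
def max_connections_per_machine(processes:, pool_size:, servers:)
  processes * pool_size * servers
end

# Hypothetical inputs, not real Coinbase configuration.
per_machine = max_connections_per_machine(processes: 40, pool_size: 25, servers: 12)
puts per_machine # 40 * 25 * 12 = 12000
```

Because the three factors multiply, adding CPU cores (and so processes) or adding servers to a cluster each scale the connection count linearly.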

Blue-green deploys

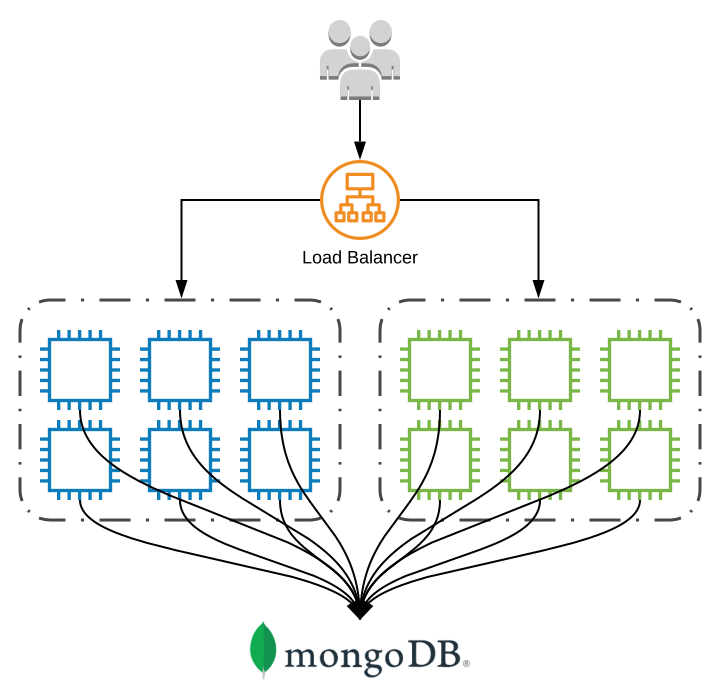

Maintaining product velocity is essential at Coinbase. We deploy to production hundreds of times a day across our fleet. In the general case we use blue-green deployments, spinning up a new set of instances for each deploy and waiting for them to report healthy before shutting down the instances from the previous deploy.

This blue-green deploy approach means we run twice as many server instances during a deploy. It also means twice as many connections to MongoDB.

Connection storms

The large number of connections from each instance, combined with the number of instances being created during deploys, leads to our application opening tens of thousands of connections to each MongoDB cluster. When deploying during high-traffic periods, with our application auto-scaled up to handle incoming traffic, we would see spikes of almost 60K connections in a single minute, or 1K per second.
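The arithmetic behind these figures is simple, and a short sketch makes it explicit. The helper names are hypothetical, and the steady-state value is a made-up input rather than a measured one; only the 60K-per-minute figure comes from the text above.

```ruby
# During a blue-green deploy the old and new instance sets run side by side,
# so the connection count is roughly double the steady state.
def connections_during_deploy(steady_state_connections)
  steady_state_connections * 2
end

# Average connections opened per second, given a count observed over a window.
def connections_per_second(new_connections, window_seconds)
  new_connections.fdiv(window_seconds)
end

puts connections_per_second(60_000, 60) # 1000.0
puts connections_during_deploy(20_000)  # 40000 (hypothetical steady state of 20K)
```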

Hoping to reduce some of this connection load on the database, in March we modified our deployment topology, introducing a routing layer designed to transfer this load from the `mongod` core database process to a `mongos` shard router process. Unfortunately, the connections affected the `mongos` process in a similar way, so this change didn’t resolve the problem.

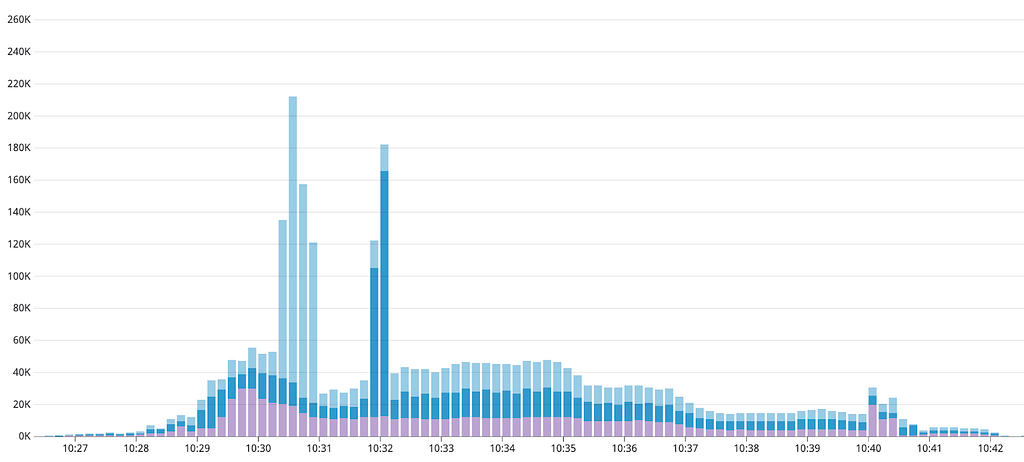

We experienced various failure modes from these connection counts, including an unfortunate interaction where the Ruby driver could cause a connection storm on an already degraded database (this has since been fixed). This was seen during a prolonged incident in April, as described in this Post Mortem, where we saw connection attempts above MongoDB’s 128K maximum to a single host.

Proxying connections

The sheer number of connections from our Rails application was the root problem, so we focused on reducing it. Analyzing the total time spent querying MongoDB showed that these connections went mostly unused; the application could serve the same amount of traffic with 5% of the current connection count. The obvious solution was some form of external connection pooling, similar to PgBouncer for PostgreSQL. While there was prior art, there was no currently supported solution for connection pooling for MongoDB.
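One way to make this kind of estimate is to sum the time spent inside MongoDB queries over a window and divide by the window length, which gives the average number of connections in use at once (an application of Little's law). The sketch below uses hypothetical helper names and made-up sample durations; it is not the analysis we actually ran.

```ruby
# Average number of connections busy at once: total query time / wall-clock time.
def average_busy_connections(query_durations_s, window_s)
  query_durations_s.sum / window_s
end

# Fraction of the open connections that are doing work on average.
def utilization(query_durations_s, window_s, open_connections)
  average_busy_connections(query_durations_s, window_s) / open_connections
end

durations = [0.5, 0.25, 0.25, 1.0] # seconds; hypothetical samples from a 1s window
puts average_busy_connections(durations, 1.0) # 2.0
puts utilization(durations, 1.0, 40)          # 0.05, i.e. 5% of 40 open connections
```

This is an average, so a real pool still needs headroom above it to absorb bursts.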

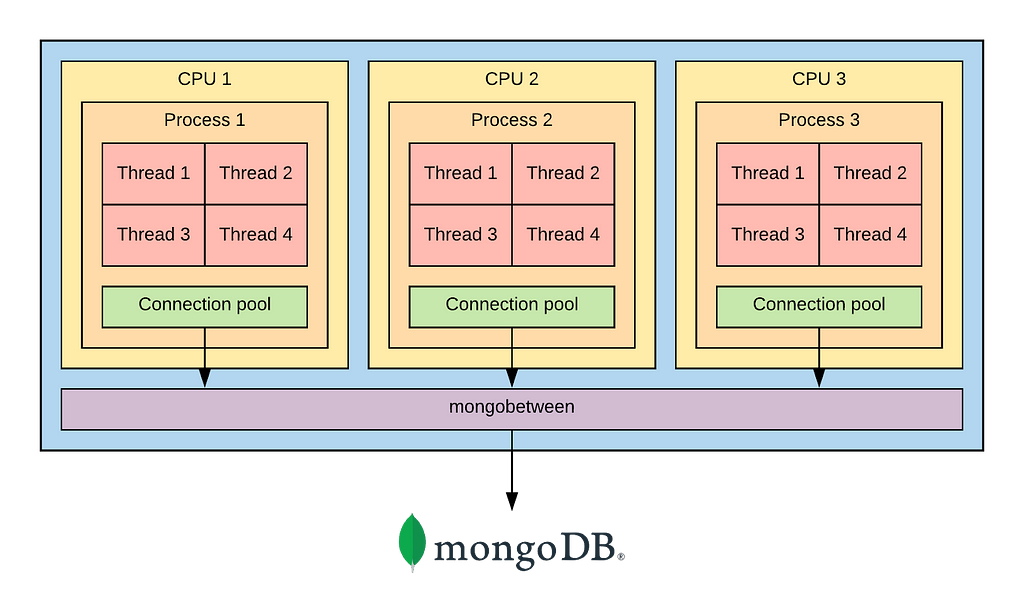

We decided to prototype our own MongoDB connection proxy, which we call `mongobetween`. The requirements were simple: small and fast, with minimal complexity and state management. We wanted to avoid having to introduce a new layer in Rails, and didn’t want to reimplement MongoDB’s wire protocol.

`mongobetween` is written in Golang, and is designed to run as a sidecar alongside any application having trouble managing its own MongoDB connection count. It multiplexes the connections from the application across a small connection pool managed by the Golang MongoDB driver. It manages a small amount of state: a MongoDB cursorID -> server map, which it stores in an in-memory LRU cache.
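The cursor map is needed because a cursor lives on the server that created it, so follow-up requests for more results have to be routed back to that same server. As an illustration only, here is a minimal sketch of a bounded cursorID -> server map with least-recently-used eviction. It is written in Ruby to match the rest of this post; `mongobetween` itself is written in Golang, and the class name, method names, cursor IDs, and host names below are all hypothetical rather than taken from its source.

```ruby
# Bounded cursorID -> server map with least-recently-used eviction.
# Relies on Ruby hashes preserving insertion order: the first entry is the oldest.
class CursorCache
  def initialize(capacity)
    raise ArgumentError, "capacity must be positive" unless capacity.positive?

    @capacity = capacity
    @entries = {}
  end

  # Remember which server owns a cursor, evicting the oldest entry if over capacity.
  def put(cursor_id, server)
    @entries.delete(cursor_id)
    @entries[cursor_id] = server
    @entries.shift if @entries.size > @capacity
    server
  end

  # Look up the server for a cursor and mark the entry as recently used.
  def get(cursor_id)
    return nil unless @entries.key?(cursor_id)

    server = @entries.delete(cursor_id)
    @entries[cursor_id] = server
  end

  # Forget a cursor, for example once it is exhausted or killed.
  def delete(cursor_id)
    @entries.delete(cursor_id)
  end

  def size
    @entries.size
  end
end

cache = CursorCache.new(2)
cache.put(101, "mongos-a:27017") # hypothetical cursor IDs and hosts
cache.put(102, "mongos-b:27017")
cache.get(101)                   # touch 101, so 102 is now the oldest entry
cache.put(103, "mongos-a:27017") # over capacity: evicts 102
p cache.get(102)                 # nil
p cache.get(101)                 # "mongos-a:27017"
```

This sketch is not thread-safe; a proxy serving many clients at once would need to guard the map with a lock.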

Results

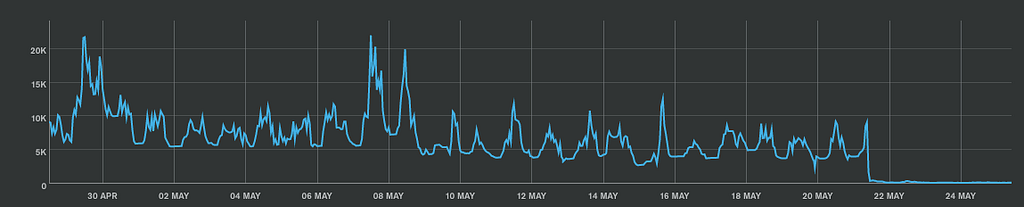

Since rolling out the connection proxy, we’ve reduced the overall count of outgoing connections to MongoDB by around 20x. Deploy connection spikes which used to hit 30K now hit 1.5K connections. The application steady state, which used to require 10K connections per MongoDB router, now only needs 200–300 connections total.

Open source

Today we’re announcing that we are open-sourcing the MongoDB connection proxy at github.com/coinbase/mongobetween. We would love to hear from you if you are experiencing similar MongoDB connection storm issues and would like to chat about our solution. If you’re interested in working on challenging availability problems and building the future of the cryptoeconomy, come join us.

All images provided herein are by Coinbase. All trademarks are property of their respective owners.

Scaling connections with Ruby and MongoDB was originally published in The Coinbase Blog on Medium.