Building state-of-the-art machine learning technology with efficient execution for the crypto economy

By Catalin-Stefan Tiseanu

Introduction

At Coinbase, we use machine learning for a variety of use cases, from preventing fraud and keeping our users safe, to curating and personalizing content, to detecting whether identities match.

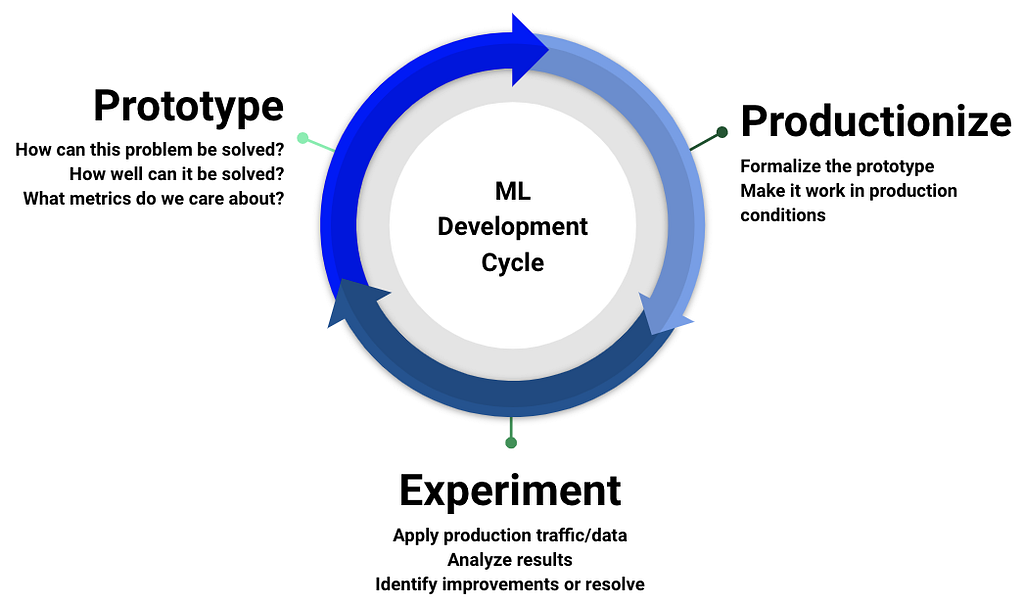

One of the critical differences between machine learning projects and other types of software engineering is that it’s hard to forecast the impact of a machine learning model before actually trying out a prototype. That’s why reaching an initial prototype quickly, and then iterating fast, is critical for machine learning projects.

With this in mind, we set out to build a suite of machine learning frameworks that balance quick iteration cycles against the adoption of new state-of-the-art practices. In this blog post, we’ll detail the ML systems we built to enable anyone in the company to quickly build high-performing ML models: EasyML, Seq2Win and EasyBlend.

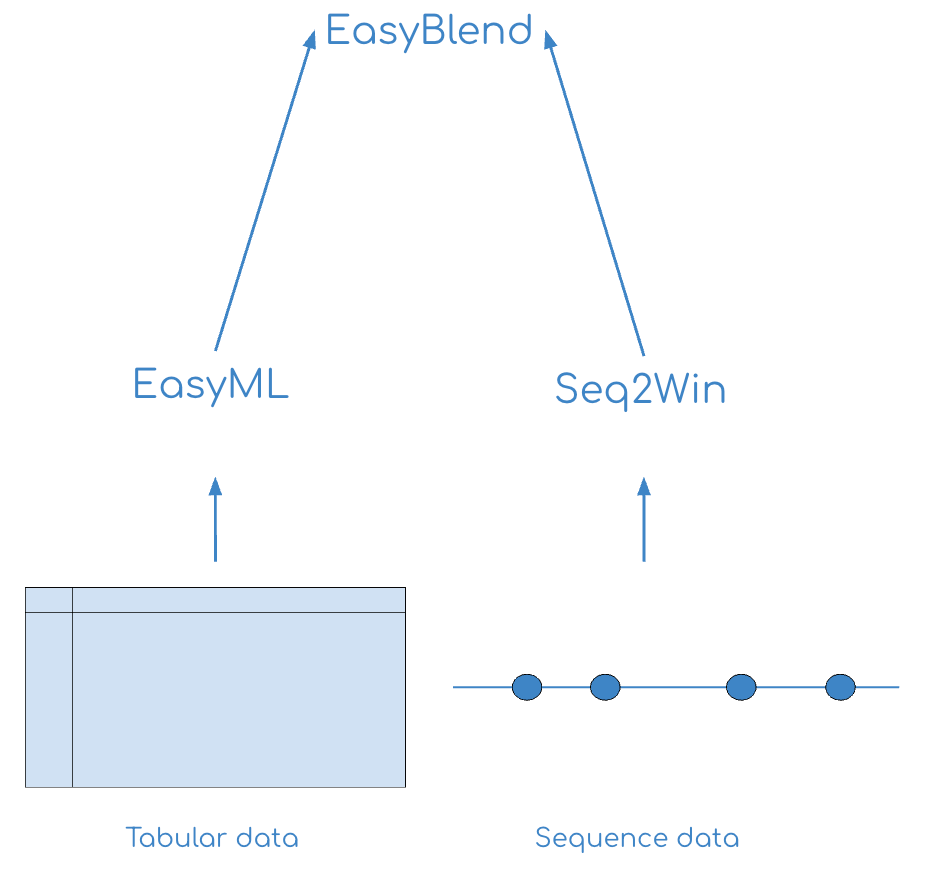

EasyML enables building models on tabular data, i.e. data consisting of multiple types of features, such as numerical (user age on site), categorical (user city) or dates (last transaction date): in other words, ‘spreadsheet data’. Seq2Win enables building models on sequential data, e.g. sequences of front-end user events. EasyBlend enables us to combine multiple models. Taken together, these three frameworks can be used to build machine learning models for brand-new use cases in just a few hours.

This is how it all fits together:

EasyML

The linchpin of our ML model framework is EasyML, an AutoML framework that specializes in quickly building high-performance models for tasks on tabular data, i.e. data returned from a SQL query or read from a CSV file (risk models, personalization models, news ranking, etc.). Almost all of the ML models we use in production at Coinbase today inherit from EasyML.

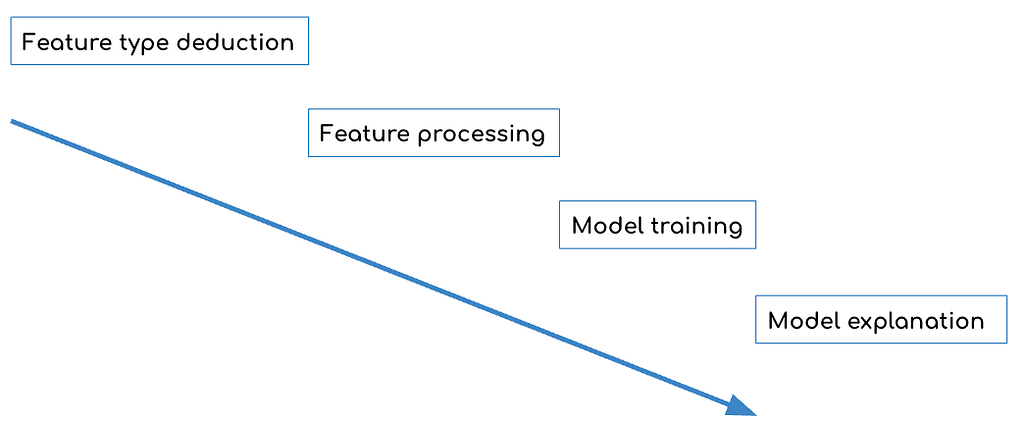

This is what model development using EasyML looks like:

- Feature type deduction: features are automatically categorized by type as numerical, categorical or dates.

- Feature processing: transforms are applied according to the feature type, such as bucketization, differences between dates, and date-specific features such as day of week and hour of week. Processing is done in parallel, leveraging all available resources.

- Model training: EasyML supports a wide variety of modeling approaches, with best practices baked in. Currently, linear models, gradient-boosted trees and deep learning models are supported. EasyML also supports multi-GPU training out of the box.

- Model explanation: explainability is top of mind for our critical models, so EasyML provides both global feature importance and per-sample analysis via Shapley values (SHAP).
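To make the first two steps concrete, here is a minimal, standard-library-only sketch of type deduction and per-type processing. It is not EasyML’s code: the function names, the quantile bucketing, ISO-8601 date parsing, the hour-of-week feature and the reference date used for date differences are all assumptions chosen for illustration, and unlike EasyML it processes columns sequentially.

```python
import bisect
from datetime import datetime
from typing import Callable, Dict, List, Sequence


def _parses(parse: Callable[[str], object], value: str) -> bool:
    """Return True if `parse` accepts `value` without raising ValueError."""
    try:
        parse(value)
        return True
    except ValueError:
        return False


def deduce_type(values: Sequence[str]) -> str:
    """Classify a raw string column as 'numerical', 'date' or 'categorical'."""
    present = [v for v in values if v != ""]  # empty string = missing value
    if present and all(_parses(float, v) for v in present):
        return "numerical"
    if present and all(_parses(datetime.fromisoformat, v) for v in present):
        return "date"
    return "categorical"


def quantile_edges(xs: List[float], n_buckets: int) -> List[float]:
    """Approximate quantile boundaries used to bucketize a numerical column."""
    s = sorted(xs)
    return [s[len(s) * k // n_buckets] for k in range(1, n_buckets)]


def process_column(values: Sequence[str], reference: datetime,
                   n_buckets: int = 4) -> List[Dict[str, float]]:
    """Apply a type-dependent transform to every value of one column.

    Assumes naive (timezone-free) dates, compared against a naive `reference`.
    """
    kind = deduce_type(values)
    if kind == "numerical":
        edges = quantile_edges([float(v) for v in values if v != ""], n_buckets)
        # Missing values get their own bucket id, -1.
        return [{"bucket": -1 if v == "" else bisect.bisect_right(edges, float(v))}
                for v in values]
    if kind == "date":
        rows = []
        for v in values:
            if v == "":
                rows.append({"day_of_week": -1, "hour_of_week": -1, "days_before_ref": -1.0})
                continue
            d = datetime.fromisoformat(v)
            rows.append({
                "day_of_week": d.weekday(),                 # Monday = 0
                "hour_of_week": d.weekday() * 24 + d.hour,  # 0..167
                "days_before_ref": (reference - d).total_seconds() / 86400,
            })
        return rows
    # Categorical: map each distinct value (including "") to an integer id.
    vocab = {v: i for i, v in enumerate(sorted(set(values)))}
    return [{"category_id": vocab[v]} for v in values]
```

In a real pipeline the bucket edges and vocabulary would be fitted on training data only and reused at serving time, which is one reason unifying training and serving in one package matters.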

The EasyML development workflow provides several strategic advantages:

- Training and serving workflows for most ML models can be unified into one package, ensuring better testing and performance.

- Models can be developed faster and with lower complexity, by just inheriting from EasyML and writing fewer than 20 lines of custom Python code.

- Improvements to EasyML, whether in feature processing, model training or explainability, are automatically inherited by all models that use it.
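The inheritance pattern described above can be sketched with a template-method base class. Everything here is hypothetical: `TabularModel`, `load_rows`, `target` and the `ChurnModel` use case are invented names, and the per-value positive-rate "learner" is only a placeholder for EasyML’s real feature processing and model training. The point is the division of labor: a subclass declares only its data and its label, so any improvement to the base class’s `fit` reaches every subclass.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Tuple

Row = Dict[str, str]


class TabularModel(ABC):
    """Hypothetical stand-in for a shared base class such as EasyML."""

    target: str  # name of the label column; set by each subclass

    @abstractmethod
    def load_rows(self) -> List[Row]:
        """Return training rows, e.g. the result of a SQL query or a CSV file."""

    def features(self, row: Row) -> Dict[str, str]:
        # Shared step: every column except the label is treated as a feature.
        return {k: v for k, v in row.items() if k != self.target}

    def fit(self) -> "TabularModel":
        # Placeholder learner: positive rate for each (feature, value) pair.
        rows = self.load_rows()
        self.prior = sum(int(r[self.target]) for r in rows) / len(rows)
        counts: Dict[Tuple[str, str], List[int]] = {}
        for r in rows:
            label = int(r[self.target])
            for pair in self.features(r).items():
                stats = counts.setdefault(pair, [0, 0])  # [positives, total]
                stats[0] += label
                stats[1] += 1
        self.rates = {pair: pos / total for pair, (pos, total) in counts.items()}
        return self

    def predict(self, row: Row) -> float:
        # Average the rates of the row's feature values; unseen values use the prior.
        rates = [self.rates.get(pair, self.prior) for pair in self.features(row).items()]
        return sum(rates) / len(rates) if rates else self.prior


# A use-case model only declares its label column and its data source.
class ChurnModel(TabularModel):
    target = "churned"

    def load_rows(self) -> List[Row]:
        return [
            {"plan": "free", "churned": "1"},
            {"plan": "free", "churned": "1"},
            {"plan": "pro", "churned": "0"},
            {"plan": "pro", "churned": "1"},
        ]
```

Usage is `ChurnModel().fit().predict({"plan": "pro"})`; swapping the placeholder learner in `TabularModel.fit` for a better one would change every subclass without touching their code.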

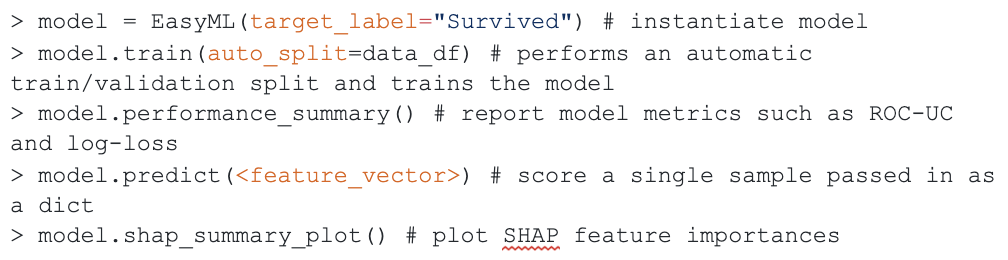

Here is an example of EasyML in action for the Titanic dataset from Kaggle:

Seq2Win

As a complement to EasyML, Seq2Win uses a state-of-the-art deep learning model, based on the Transformer architecture, to operate on sequences of user events (for example, front-end and back-end events). This enables genuine user intent modeling: a real-time pulse of what a user intends to do, based on their most recent events.

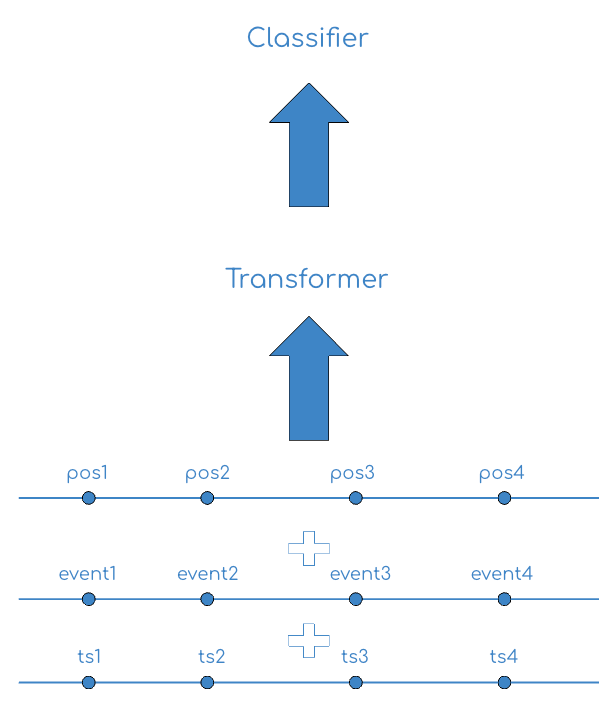

Here is how Seq2Win works:

Given a sequence of user events event1, event2, event3, event4, … and the sequence of their timestamps ts1, ts2, ts3, ts4, …, we convert both sequences to categoricals: this is trivial for events, and for timestamps we bucketize the differences between consecutive timestamps. We’re then left with two sequences of categoricals, which can be thought of as sequences of tokens (to borrow the vocabulary of NLP, the domain where Transformers are best known). We create embeddings for both the event and the bucketized timestamp difference, add them together, and then add a learned positional embedding. This sum is the input to the first encoder layer.
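The input construction described above can be sketched in plain Python. This is an illustration under assumptions, not Seq2Win’s code: the bucket edges in `DELTA_EDGES`, the reserved bucket 0 for the first event (which has no predecessor), and the small randomly initialized embedding tables standing in for learned parameters are all hypothetical.

```python
import bisect
import random
from typing import List, Sequence

Table = List[List[float]]

# Hypothetical bucket edges, in seconds, for gaps between consecutive events.
DELTA_EDGES = [1, 5, 30, 60, 300, 3600, 86400]
# len(DELTA_EDGES) + 1 gap buckets, plus bucket 0 for the first event.
N_DELTA_BUCKETS = len(DELTA_EDGES) + 2


def bucketize_deltas(timestamps: Sequence[float]) -> List[int]:
    """Turn timestamps into categorical tokens: one bucket id per event."""
    buckets = [0]  # bucket 0: the first event has no previous timestamp
    for prev, cur in zip(timestamps, timestamps[1:]):
        buckets.append(1 + bisect.bisect_right(DELTA_EDGES, cur - prev))
    return buckets


def make_table(n_rows: int, dim: int, rng: random.Random) -> Table:
    """Randomly initialized embedding table; in a real model these are learned."""
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(n_rows)]


def encoder_input(event_ids: Sequence[int], timestamps: Sequence[float],
                  event_emb: Table, delta_emb: Table, pos_emb: Table) -> Table:
    """Sum of event, time-delta and positional embeddings at each position."""
    delta_ids = bucketize_deltas(timestamps)
    return [
        [e + d + p for e, d, p in zip(event_emb[ev], delta_emb[dt], pos_emb[i])]
        for i, (ev, dt) in enumerate(zip(event_ids, delta_ids))
    ]
```

Each row of the result is one vector per event, which is what the first encoder layer consumes; a framework such as PyTorch would replace the lists with embedding layers and tensors.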

In terms of the Transformer architecture, we used a sequence length of 256, wide multi-head attention with 8 heads, and 4 encoder layers (no decoder layers).
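Because the encoder works on a fixed sequence length of 256, event histories have to be cut or padded to fit. A minimal sketch, assuming (this is not stated in the post) that the most recent events are kept, shorter histories are left-padded with a reserved padding id 0, and a mask lets attention ignore the padding. The `Seq2WinConfig` container is also hypothetical and holds only the hyperparameters given above.

```python
from dataclasses import dataclass
from typing import List, Tuple

PAD_ID = 0  # hypothetical padding id; real event ids are assumed to start at 1


@dataclass(frozen=True)
class Seq2WinConfig:
    seq_len: int = 256         # sequence length, from the post
    n_heads: int = 8           # attention heads, from the post
    n_encoder_layers: int = 4  # encoder-only: no decoder layers


def fit_to_length(tokens: List[int], seq_len: int) -> Tuple[List[int], List[bool]]:
    """Keep the most recent `seq_len` tokens and left-pad shorter histories.

    Returns the token ids and a mask where True marks a real (non-padding) token.
    """
    recent = tokens[-seq_len:]
    n_pad = seq_len - len(recent)
    return [PAD_ID] * n_pad + recent, [False] * n_pad + [True] * len(recent)
```

Keeping the tail of the history, rather than the head, matches the goal of reading intent from a user’s most recent events.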

The strategic advantage of Seq2Win is that its underlying data source (user events) is not tied to any particular domain. The ML team at Coinbase has used it as a successful sub-model for both fraud prevention and personalizing the asset buying experience, two distinct business domains. In the near term, to unlock the full power of the model, the ML team plans to pretrain Seq2Win on the events of all users at Coinbase and to adopt more recent Transformer architectures.

EasyBlend

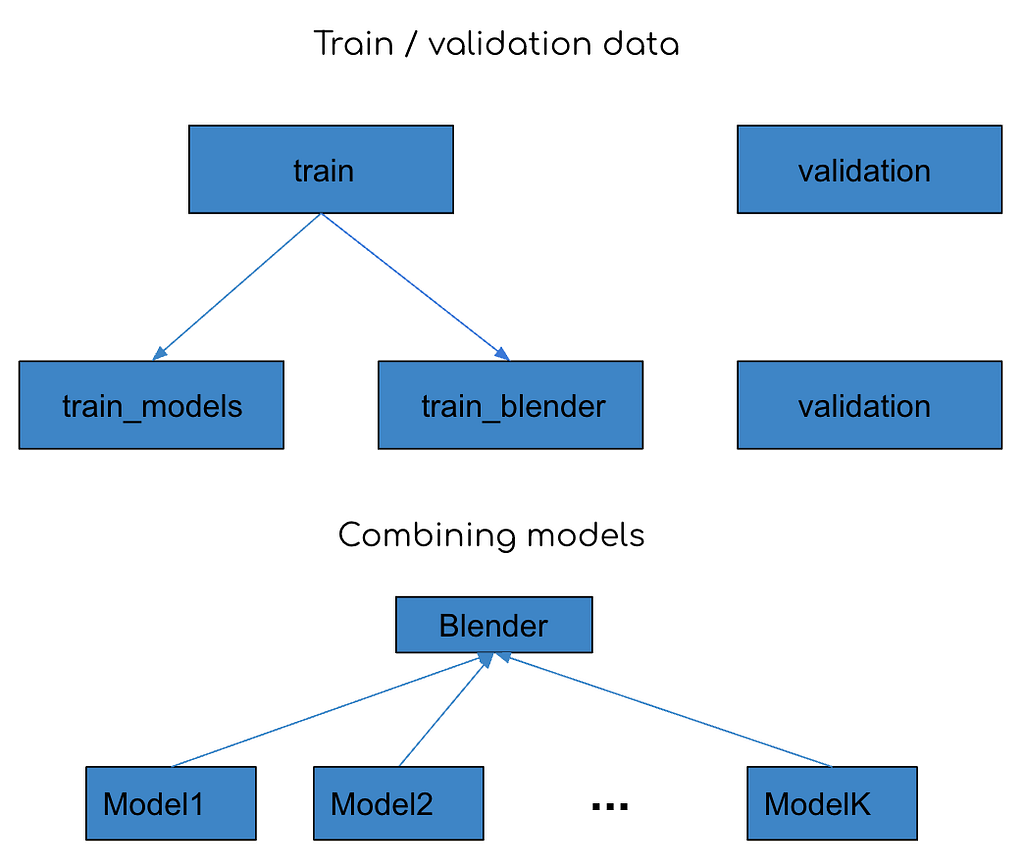

EasyBlend was built to let ML engineers easily combine tabular EasyML models and sequence Seq2Win models, since these two kinds of models differ in both architecture and input features. EasyBlend can train multiple types of models under the hood and combine their results using linear blending. It can be thought of as a two-layer approach: the models to be combined sit in the first layer, and the blender model sits in the second layer.

Specifically, given train and validation datasets, EasyBlend first splits train into train_models and train_blender. It trains the first-layer models on train_models and uses them to score both train_blender and validation. It then trains the blender model (usually a linear model such as linear or logistic regression) on the first-layer scores for train_blender, and validates it on validation, which was scored by the same first-layer models.
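The procedure above is a form of stacking. The sketch below follows the same steps in dependency-free Python and is illustrative only: `LogisticRegression` is a deliberately minimal gradient-descent implementation standing in for whatever learners EasyBlend actually uses, `OnColumns` is a hypothetical wrapper that lets each first-layer model see different input features (as EasyML and Seq2Win do), and the 30% `blender_frac` split is an assumed default.

```python
import math
import random
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
Example = Tuple[List[float], int]  # (features, binary label)


class LogisticRegression:
    """Minimal batch gradient-descent logistic regression (illustration only)."""

    def __init__(self, lr: float = 0.5, epochs: int = 300):
        self.lr, self.epochs = lr, epochs

    def _prob(self, x: Sequence[float]) -> float:
        z = self.b + sum(w * v for w, v in zip(self.w, x))
        z = max(-30.0, min(30.0, z))  # avoid overflow in exp
        return 1.0 / (1.0 + math.exp(-z))

    def fit(self, X: Matrix, y: List[int]) -> "LogisticRegression":
        n, d = len(X), len(X[0])
        self.w, self.b = [0.0] * d, 0.0
        for _ in range(self.epochs):
            grad_w, grad_b = [0.0] * d, 0.0
            for xi, yi in zip(X, y):
                err = self._prob(xi) - yi  # d(log loss)/dz for this example
                for j in range(d):
                    grad_w[j] += err * xi[j]
                grad_b += err
            self.w = [w - self.lr * g / n for w, g in zip(self.w, grad_w)]
            self.b -= self.lr * grad_b / n
        return self

    def predict(self, X: Matrix) -> List[float]:
        return [self._prob(x) for x in X]


class OnColumns:
    """Restricts a model to a subset of feature columns."""

    def __init__(self, model: LogisticRegression, cols: List[int]):
        self.model, self.cols = model, cols

    def _select(self, X: Matrix) -> Matrix:
        return [[x[c] for c in self.cols] for x in X]

    def fit(self, X: Matrix, y: List[int]) -> "OnColumns":
        self.model.fit(self._select(X), y)
        return self

    def predict(self, X: Matrix) -> List[float]:
        return self.model.predict(self._select(X))


def easy_blend(train: List[Example], validation: List[Example], models: list,
               blender: LogisticRegression, blender_frac: float = 0.3, seed: int = 0):
    """Two layers: first-layer models fit on train_models, blender on train_blender."""
    rows = list(train)
    random.Random(seed).shuffle(rows)
    cut = int(len(rows) * (1 - blender_frac))
    train_models, train_blender = rows[:cut], rows[cut:]

    def split(data: List[Example]):
        return [x for x, _ in data], [y for _, y in data]

    def scores(X: Matrix) -> Matrix:
        # One column per first-layer model.
        return [list(r) for r in zip(*(m.predict(X) for m in models))]

    X_m, y_m = split(train_models)
    for m in models:
        m.fit(X_m, y_m)

    X_b, y_b = split(train_blender)
    blender.fit(scores(X_b), y_b)

    X_v, _ = split(validation)
    return blender, blender.predict(scores(X_v))
```

Training the blender on rows the first-layer models never saw keeps it from simply rewarding whichever sub-model overfits most; the returned validation predictions can then be scored with any metric, such as ROC-AUC.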

The final result is a quick and easy way to combine different models while keeping the end results easy to explain, since the blender models are linear.
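To make "easy to explain" concrete: with $K$ first-layer models producing scores $s_1, \dots, s_K$ and a linear blender with weights $w_i$ and bias $b$, each sub-model’s contribution to a single prediction can be read off directly. A sketch, assuming the blender output $z$ is taken in its linear space (log-odds, for logistic regression) and expectations are over the blender’s training data:

$$
z(\mathbf{s}) = b + \sum_{i=1}^{K} w_i s_i, \qquad
\phi_i(\mathbf{s}) = w_i \left( s_i - \mathbb{E}[s_i] \right), \qquad
\sum_{i=1}^{K} \phi_i(\mathbf{s}) = z(\mathbf{s}) - \mathbb{E}\left[ z(\mathbf{s}) \right]
$$

When the scores are treated as independent, each $\phi_i$ is exactly the Shapley value of sub-model $i$ for a linear blender, so the per-model contributions add up to how far a given prediction sits from the average.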

Impact

With EasyML, Seq2Win and EasyBlend playing key roles, we have driven higher net revenue and reduced fraud rates while significantly reducing friction for users. On the machine learning side, we have achieved ROC-AUC gains of +10% in many of our models.

Finally, we have achieved a quick iteration cycle: anyone on the team, or elsewhere in the company, who knows Python can assemble a training and validation dataset and build an initial ML model in minutes instead of the days it took before.

Conclusion

With these building blocks, ML engineers at Coinbase can quickly build state-of-the-art models without sacrificing either performance or iteration speed. This has led to meaningful business impact, higher productivity for ML engineers and faster iteration times. Going forward, we’re excited to improve on these building blocks by pretraining Seq2Win and by using Graph Neural Networks to handle blockchain data.

If you are interested in technical challenges like this, Coinbase is hiring.

Acknowledgements: The author would like to thank the following people for their contributions: Michael Li, Erik Reppel, Vik Scoggins and Burkay Gur.

Unless otherwise noted, all images provided herein are by Coinbase.

Building state-of-the-art machine learning technology with efficient execution for the crypto… was originally published in The Coinbase Blog on Medium.